As an InfoSec Architect, part of my job is to review the formal documentation provided by the cloud provider (AWS in this case) and make sure the proposed solution complies with security standards and has enough security controls to safeguard the SaaS/PaaS solutions. Sometimes you don’t get enough clarification from the provider’s documentation and you have to rely on Google or Stack Overflow! However, you have to take those answers with a grain of salt and validate that the security controls can indeed be implemented. AWS SFTP is one such new, but not so new, service where the majority of the documentation is about the AWS-managed identity provider and there is not much clarity on the Custom Identity Provider. On top of that, it is not clear how to make use of key-based authentication as opposed to basic username/password authentication. Let’s do some learning with hands-on practice so we leave no doubt on security questions!

Where do we start so we can keep our focus on identity, authentication, logging, monitoring, etc.? Working with identity providers is where I started because it comes with a ready-to-go CloudFormation YAML script. You will be able to provision all the necessary components (SFTP server, API Gateway, Lambda, IAM role/policy) in a few minutes. This template does not make the resources private (VPC joined) but that’s not our focus for this exercise. Let’s leave that to your engineering team! Yes, you get the solution up, but it does not work the way you wish, and the struggle starts here!

The lambda function is where we will spend most of our time because it is responsible for security (authentication/authorization). It’s a bridge between the AWS SFTP service and the real identity provider. For our learning, we will make those authn/authz decisions in the lambda itself. My initial attempt to use username/password based authentication did not work due to a lack of permissions in the role whose ARN is returned in the lambda response object. Let me share the lambda code and we will walk through the lines that need attention:

'use strict';

// GetUserConfig Lambda

exports.handler = (event, context, callback) => {

console.log("Username:", event.username, "ServerId: ", event.serverId);

var response;

var inlinepolicy = '{ "Version": "2012-10-17", "Statement": [ { "Sid": "VisualEditor0", "Effect": "Allow", "Action": "s3:ListBucket", "Resource": "arn:aws:s3:::${transfer:HomeBucket}", "Condition": { "StringLike": { "s3:prefix": [ "${transfer:UserName}/*", "${transfer:UserName}" ] } } }, { "Sid": "VisualEditor2", "Effect": "Allow", "Action": [ "s3:PutObject", "s3:GetObjectAcl", "s3:GetObject", "s3:DeleteObjectVersion", "s3:DeleteObject", "s3:PutObjectAcl", "s3:GetObjectVersion" ], "Resource": [ "arn:aws:s3:::${transfer:HomeDirectory}*", "arn:aws:s3:::${transfer:HomeDirectory}" ] } ]}';

// Check if the username presented for authentication is correct. This doesn't check the value of the serverId, only that it is provided.

if (event.serverId !== "" && event.username === 'myuser1') {

response = {

Role: 'arn:aws:iam::862832033224:role/AllowTransferServiceTo3', // The user will be authenticated if and only if the Role field is not blank

Policy: inlinepolicy, // Optional JSON blob to further restrict this users permissions

HomeDirectory: '/aspnet4you-sftp-s3/myuser1/',

HomeBucket: 'aspnet4you-sftp-s3',

HomeFolder: 'myuser1'

};

// Check if password is provided

if (event.password == "") {

// If no password provided, return the user's SSH public key

response['PublicKeys'] = [ 'ssh-rsa replace-with-ssh-public-key-in-one-line' ];

// Check if password is correct

} else if (event.password !== 'replace-with-your-password') {

// Return HTTP status 200 but with no role in the response to indicate authentication failure

response = {};

}

} else {

// Return HTTP status 200 but with no role in the response to indicate authentication failure

response = {};

}

callback(null, response);

};

SFTP works with underlying S3 buckets but the template doesn’t create one. You will need to create a bucket with a folder structure based on username. The role ARN is important because it allows the SFTP service (transfer.amazonaws.com) to access the S3 bucket(s) based on the policy configured. We could have created the policy as a separate resource but we decided to use an inline policy to keep things together. Here is the policy applied to the arn:aws:iam::862832033224:role/AllowTransferServiceTo3 role. You can find documentation at Use an IAM policy to control access to AWS Transfer Family. If you are using a CMK to encrypt the bucket, be sure to configure the policy to allow access to the key.

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"s3:ListBucket",

"s3:GetBucketLocation"

],

"Resource": [

"arn:aws:s3:::aspnet4you-sftp-s3"

]

},

{

"Effect": "Allow",

"Action": [

"s3:PutObject",

"s3:GetObject",

"s3:DeleteObject",

"s3:DeleteObjectVersion",

"s3:GetObjectVersion",

"s3:GetObjectACL",

"s3:PutObjectACL"

],

"Resource": [

"arn:aws:s3:::aspnet4you-sftp-s3/*"

]

}

]

}
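The permissions policy is only half of the role. For the SFTP service to assume the role at all, the role also needs a trust relationship with transfer.amazonaws.com. Here is a minimal sketch of that trust policy, written as a JavaScript object so it can be printed as JSON. The variable name trustPolicy is only illustrative and is not part of the template.

```javascript
'use strict';

// Trust policy that lets the AWS Transfer service assume the role.
// The variable name is illustrative; the printed JSON is what would go
// into the role's trust relationship.
const trustPolicy = {
  Version: '2012-10-17',
  Statement: [
    {
      Effect: 'Allow',
      Principal: { Service: 'transfer.amazonaws.com' },
      Action: 'sts:AssumeRole'
    }
  ]
};

console.log(JSON.stringify(trustPolicy, null, 2));
```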

The policy above is very basic and does not provide the fine-grained permissions needed to limit users to their respective folder(s). We need to implement a scope-down policy. A scope-down policy is an AWS Identity and Access Management (IAM) policy that restricts users to certain portions of an Amazon S3 bucket. It does so by evaluating access in real time. Take important note: the scope-down policy does not go in the IAM role; it is passed separately, here in the Policy field of the lambda response. Let’s define our scope-down policy. I did it in Notepad and tried to copy the multi-line JSON into a variable. No luck, you end up with: Parsing error: Unterminated string constant! I tried to escape the lines but gave up after spending some time. I ended up removing the newline characters, which is not good for reading! I am sure some developers are smart and they will figure it out!

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": "s3:ListBucket",

"Resource": "arn:aws:s3:::${transfer:HomeBucket}",

"Condition": {

"StringLike": {

"s3:prefix": [

"${transfer:UserName}/*",

"${transfer:UserName}"

]

}

}

},

{

"Sid": "VisualEditor2",

"Effect": "Allow",

"Action": [

"s3:PutObject",

"s3:GetObjectAcl",

"s3:GetObject",

"s3:DeleteObjectVersion",

"s3:DeleteObject",

"s3:PutObjectAcl",

"s3:GetObjectVersion"

],

"Resource": [

"arn:aws:s3:::${transfer:HomeDirectory}*",

"arn:aws:s3:::${transfer:HomeDirectory}"

]

}

]

}
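One way around the multi-line string problem is to skip the string altogether: write the policy as a normal JavaScript object and let JSON.stringify produce the one-line JSON. This is only a sketch of that idea, not what the template ships with. The name scopeDownPolicy is my own; inlinepolicy matches the variable used in the lambda.

```javascript
'use strict';

// Scope-down policy written as a plain object. The ${transfer:...}
// placeholders sit inside single-quoted strings, so JavaScript does not
// interpolate them; they reach the Transfer service unchanged.
const scopeDownPolicy = {
  Version: '2012-10-17',
  Statement: [
    {
      Sid: 'VisualEditor0',
      Effect: 'Allow',
      Action: 's3:ListBucket',
      Resource: 'arn:aws:s3:::${transfer:HomeBucket}',
      Condition: {
        StringLike: {
          's3:prefix': ['${transfer:UserName}/*', '${transfer:UserName}']
        }
      }
    },
    {
      Sid: 'VisualEditor2',
      Effect: 'Allow',
      Action: [
        's3:PutObject',
        's3:GetObjectAcl',
        's3:GetObject',
        's3:DeleteObjectVersion',
        's3:DeleteObject',
        's3:PutObjectAcl',
        's3:GetObjectVersion'
      ],
      Resource: [
        'arn:aws:s3:::${transfer:HomeDirectory}*',
        'arn:aws:s3:::${transfer:HomeDirectory}'
      ]
    }
  ]
};

// The lambda passes the policy as a string, so serialize the object.
const inlinepolicy = JSON.stringify(scopeDownPolicy);

console.log(inlinepolicy);
```

The policy stays readable in the editor and any syntax slip shows up as a JavaScript error instead of a broken JSON string at login time.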

As you can see, this scope-down policy is variable driven, and this is where I had to spend a good amount of time to get it right! In the real world, you would get the following authorization/entitlements from the identity provider, but we hard-coded them to keep things simple. You don’t have to hard-code the user in the policy either: the ${transfer:UserName} variable is substituted with the user name at run time.

- HomeDirectory: '/aspnet4you-sftp-s3/myuser1/'

- HomeBucket: 'aspnet4you-sftp-s3'

- HomeFolder: 'myuser1'
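To give an idea of what moving away from hard-coded values could look like, here is a small sketch that derives the three entitlements from the username. The knownUsers set is a hypothetical stand-in for a real identity provider lookup, and getEntitlements is a name I made up for this example.

```javascript
'use strict';

// Hypothetical stand-in for a real identity provider lookup.
const knownUsers = new Set(['myuser1']);

const HOME_BUCKET = 'aspnet4you-sftp-s3';

// Returns the entitlements for a known user, or null for an unknown one.
function getEntitlements(username) {
  if (!knownUsers.has(username)) {
    return null;
  }
  return {
    // Template literals build the per-user path from the username.
    HomeDirectory: `/${HOME_BUCKET}/${username}/`,
    HomeBucket: HOME_BUCKET,
    HomeFolder: username
  };
}

console.log(getEntitlements('myuser1'));
console.log(getEntitlements('someone-else'));
```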

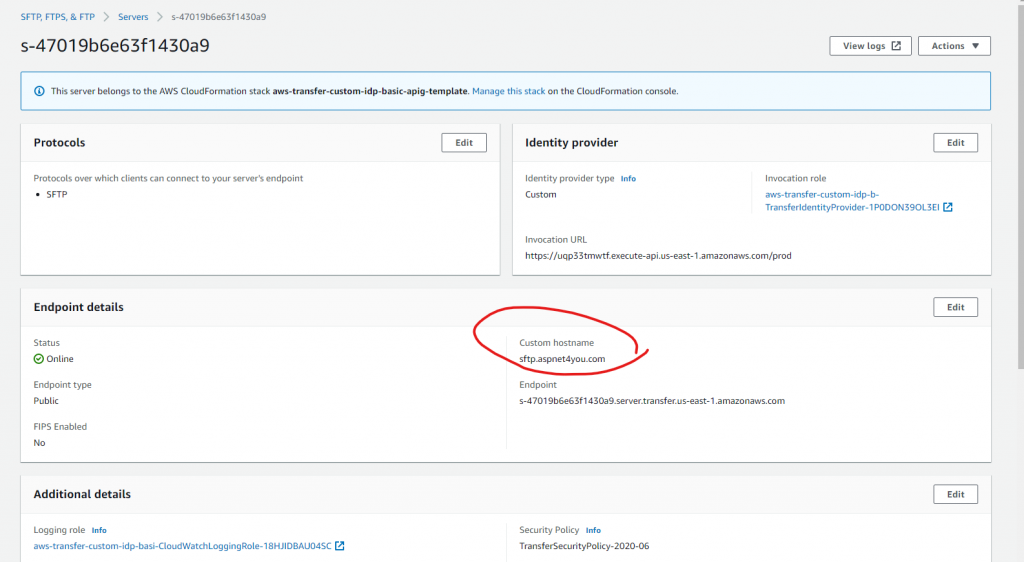

Okay, that should be good enough to connect to the SFTP service with username and password. We are going to use this approach just to make sure things work before jumping on to key-based authentication. By the way, I added a custom domain (sftp.aspnet4you.com) to make the SFTP endpoint look nice.

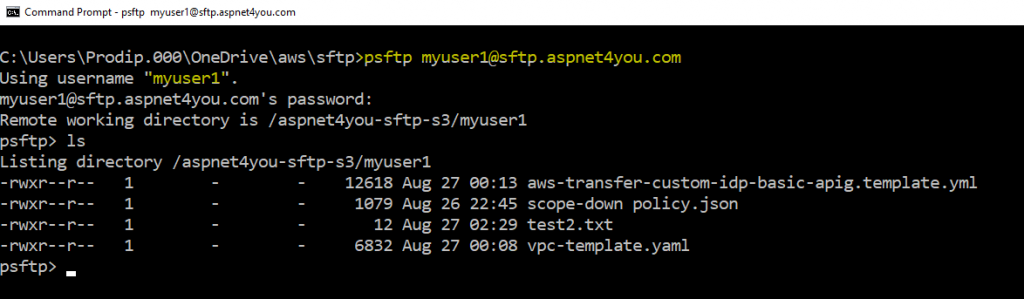

We are going to use the psftp tool to connect to the SFTP service endpoint at sftp.aspnet4you.com.

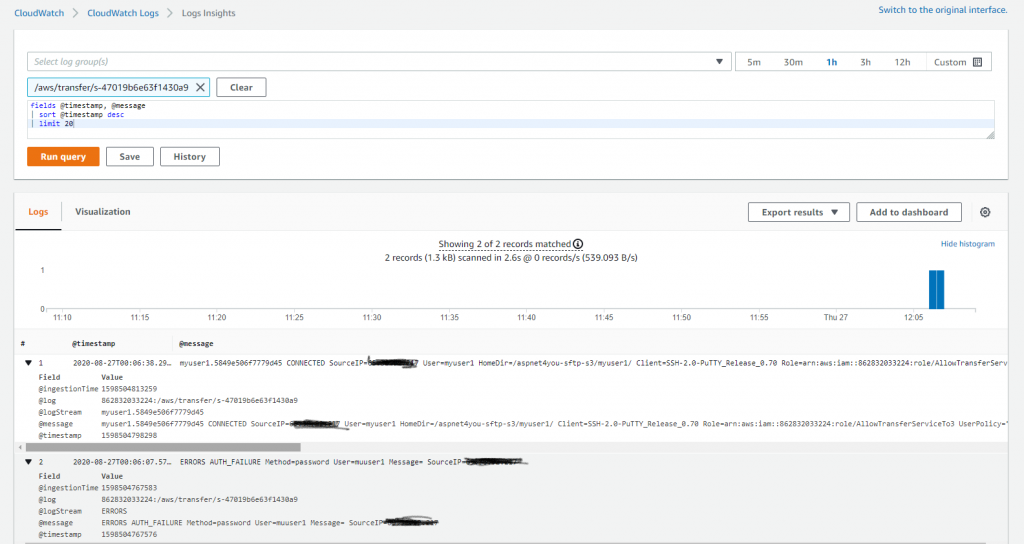

We talked about logging and monitoring. Let’s check if we can verify login activities. If you look carefully, you will notice I typed the username incorrectly and got an authentication error.
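The lambda itself can help here, since anything it writes with console.log ends up in its CloudWatch log group. Below is a sketch of a helper that writes one JSON line per login attempt and never logs the password. The function name formatAuthLog and the usedPassword field are my own additions; username and serverId are the event fields the lambda already logs.

```javascript
'use strict';

// Builds one JSON log line per login attempt. Only a flag saying whether a
// password was supplied is recorded, never the password itself.
function formatAuthLog(event, authenticated) {
  return JSON.stringify({
    username: event.username,
    serverId: event.serverId,
    authenticated: authenticated,
    usedPassword: Boolean(event.password)
  });
}

// Example with a made-up server id.
console.log(formatAuthLog({ username: 'myuser1', serverId: 's-example', password: '' }, true));
```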

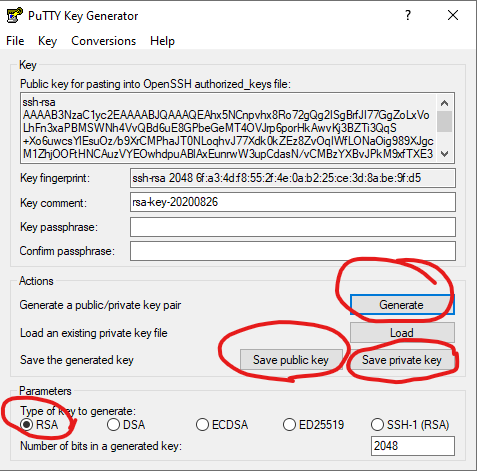

It’s time for key-based authentication. What kind of keys are supported? It looks like AWS supports SSH public keys, so that’s what we are going to try. We will use PuTTYgen to generate an SSH RSA key pair as suggested at Key management. You can find detailed documentation on how to generate a key at ssh.com. Save the public key and the private key (.ppk) file to a local folder.

We will go back to the lambda and update the response object with our public key: response['PublicKeys'] = [ 'ssh-rsa AAAAB3NzaC1yc2…..' ]; Don’t forget to redeploy the lambda!
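To make the password-versus-key decision easier to follow, here it is pulled out into a small function. Treat it as a sketch: applyCredentials and the two constants are names I made up, and the values are the same placeholders used in the lambda. When no password arrives, the lambda returns the public keys and the SFTP service checks the client’s key against them.

```javascript
'use strict';

// Placeholders, as in the lambda; replace with real values.
const EXPECTED_PASSWORD = 'replace-with-your-password';
const PUBLIC_KEYS = ['ssh-rsa replace-with-ssh-public-key-in-one-line'];

// baseResponse carries Role, Policy and HomeDirectory for a known user.
// Returns the object to hand to the lambda callback; an empty object
// means authentication failed.
function applyCredentials(event, baseResponse) {
  if (!event.password) {
    // No password supplied (empty or missing): key-based login, so return
    // the user's public keys along with the rest of the response.
    return Object.assign({}, baseResponse, { PublicKeys: PUBLIC_KEYS });
  }
  if (event.password !== EXPECTED_PASSWORD) {
    // Wrong password: no Role in the response signals failure.
    return {};
  }
  return baseResponse;
}

// Example with a made-up role ARN.
const exampleBase = { Role: 'arn:aws:iam::123456789012:role/example-role' };
console.log(applyCredentials({ username: 'myuser1', password: '' }, exampleBase));
```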

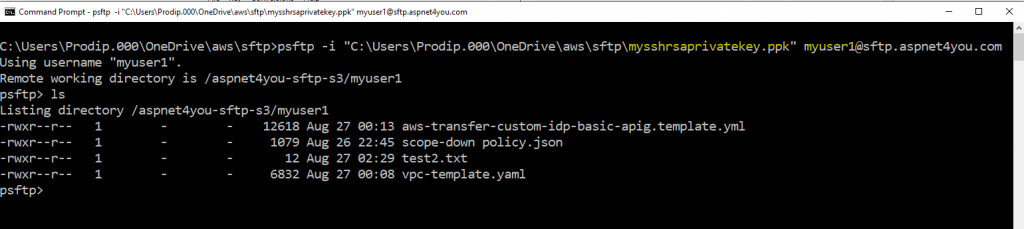

We are ready to connect to the SFTP service using the private key. You can specify the identity on the command line:

psftp -i "C:\your-path\aws\sftp\mysshrsaprivatekey.ppk" myuser1@sftp.aspnet4you.com

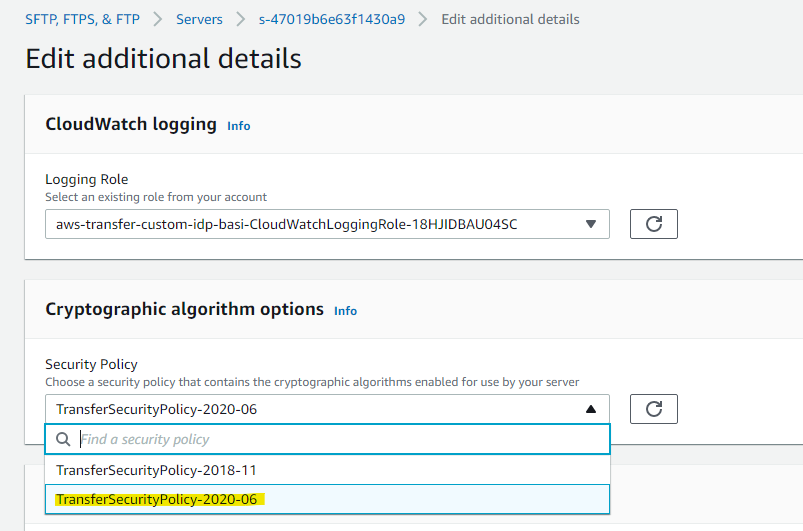

One last thing to talk about is the cipher suites used by the SFTP service. AWS has two options; I tried both and they both work. I selected the latest, TransferSecurityPolicy-2020-06. Security policies enable you to select which cryptographic algorithms are advertised by your server when negotiating a connection with SFTP clients. Kex, MAC and SSH ciphers are used for SFTP.